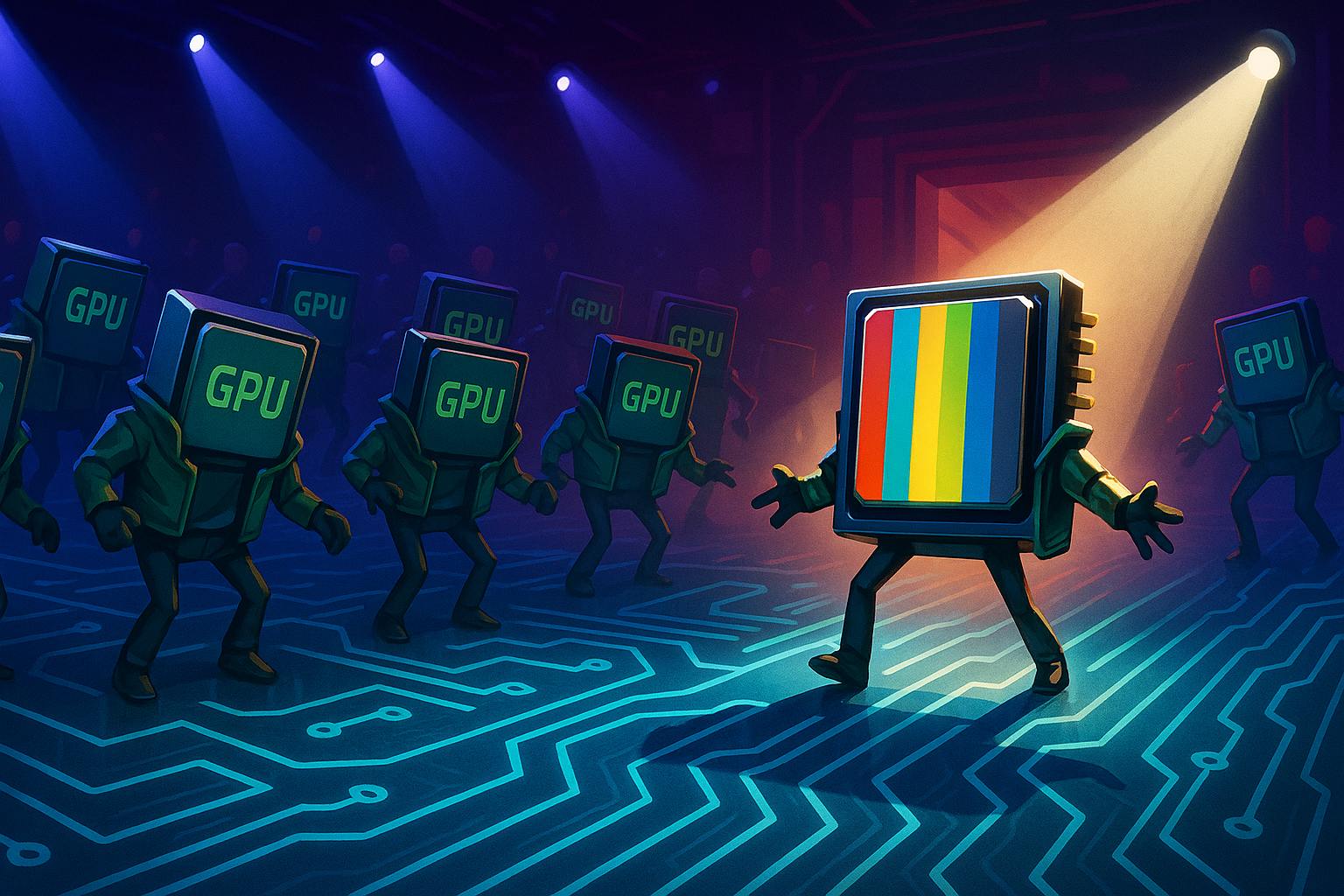

When Google revealed plans to sell its Tensor Processing Units (TPUs) outside its own cloud ecosystem, I couldn't help but see it as the tech equivalent of crashing a party where Nvidia's been the only one dancing.

For years, Google kept these AI chips locked inside its own digital walls: a closely guarded asset that customers could only access by renting time in Google's cloud. Now? They're apparently ready to take a run at Nvidia's dominance with all the subtlety of a Silicon Valley billionaire at a garage sale.

The Information broke the story that Google isn't just thinking about this move. They're in actual talks with Meta over what could be a multibillion-dollar chip deal starting in 2027. Meta. Facebook's parent company. The folks currently hemorrhaging cash on Nvidia hardware while trying to convince everyone they're an AI pioneer too.

Makes perfect sense, doesn't it?

Look, the timing here is no accident. We're watching Nvidia enjoy a moment of market dominance that's turned Jensen Huang's leather jacket into something approaching a religious artifact for tech investors. Google's essentially sidling up to potential customers with a whispered, "Hey... wanna try something different?"

I've been covering the chip wars since before most people knew there was a war to be fought. What's happening now reminds me of a classic rule of competitive strategy: don't attack the strongest part of your opponent's position. Nvidia built its empire on its GPUs but, more importantly, on CUDA, the software platform that has become the industry standard.

That software is Nvidia's moat, and it's wide and deep. Developers know CUDA. Companies have invested millions in CUDA-optimized code. Switching costs are enormous.

So what's Google doing? They're not trying to convince everyone to abandon Nvidia (they're not delusional). They're targeting high-value players who have both the technical chops and financial motivation to make the jump. Meta fits that bill perfectly—they're spending astronomical sums on AI hardware while desperately trying to establish themselves as an AI leader.

The numbers being thrown around are... ambitious. Google executives reportedly think they could capture up to 10% of Nvidia's annual revenue. Nvidia reported roughly $130 billion in revenue for its fiscal year ended January 2025, so 10% means at least $13 billion a year. That's like announcing you're going to take a slice of Fort Knox.

(Though I suppose stranger things have happened in tech.)

What makes this particularly fascinating is watching Google's famous "we'll build it ourselves" philosophy evolve into "and now you can build with it too." This is the company that decided standard data centers weren't good enough, that off-the-shelf servers wouldn't cut it, that commercially available chips weren't optimal—and now they're turning their customized approach into a product strategy.

The market's initial reaction was telling but measured. Google's stock rose 2.1% after hours, while Nvidia dipped 1.8%—the equivalent of Jensen finding some pocket lint in that famous jacket. Investors see this as interesting but not revolutionary... at least not yet.

There's a beautiful game-theory element at play. Even if Meta never fully commits to Google's TPUs, the very existence of a credible alternative gives them leverage with Nvidia. "These TPU specs sure look interesting... maybe we should reconsider our next GPU order..."

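But here's where the rubber meets the road (or should I say, where the silicon meets the circuit board?). Google can't succeed just by being "not-Nvidia." TPUs need compelling advantages in performance, power efficiency, and that thorniest of challenges: software compatibility. TPUs don't run CUDA; they're programmed through Google's XLA compiler, typically via JAX, TensorFlow, or PyTorch/XLA, so anything written directly against CUDA has to be ported.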

The broader story here is about control. As AI becomes the defining technology of our era, whoever controls the computing resources needed to train and run these increasingly massive models holds tremendous power. Google, with its vast AI research operation and seemingly bottomless pockets, was never going to be content playing second fiddle in this orchestra.

Will this be remembered as a brilliant strategic pivot or an expensive distraction? Too early to tell. But as opening salvos in the next phase of the AI chip wars go, this one has certainly got people talking.

And somewhere, I imagine Jensen Huang is either completely unfazed... or shopping for an even more intimidating leather jacket. Just in case.