Alibaba Cloud dropped a bombshell this week that's either terrifying or thrilling depending on which side of the GPU shortage you're sitting on: they've figured out how to slash Nvidia GPU usage by a staggering 82% through a clever pooling system.

In a tech world absolutely parched for AI chips, this is like someone showing up to a desert with a water multiplication machine.

The GPU shortage has become tech's version of concert tickets for Taylor Swift's Eras Tour: impossibly scarce, wildly expensive, and fueling a secondary market that makes scalpers blush. We're talking about H100 chips that reportedly sell for around $30,000 but trade for double that in some corners of the market. I've spoken with startup founders who described the procurement process as "soul-crushing."

What's fascinating about Alibaba's approach isn't that it's revolutionary—it's that it's actually kind of... obvious? In a good way.

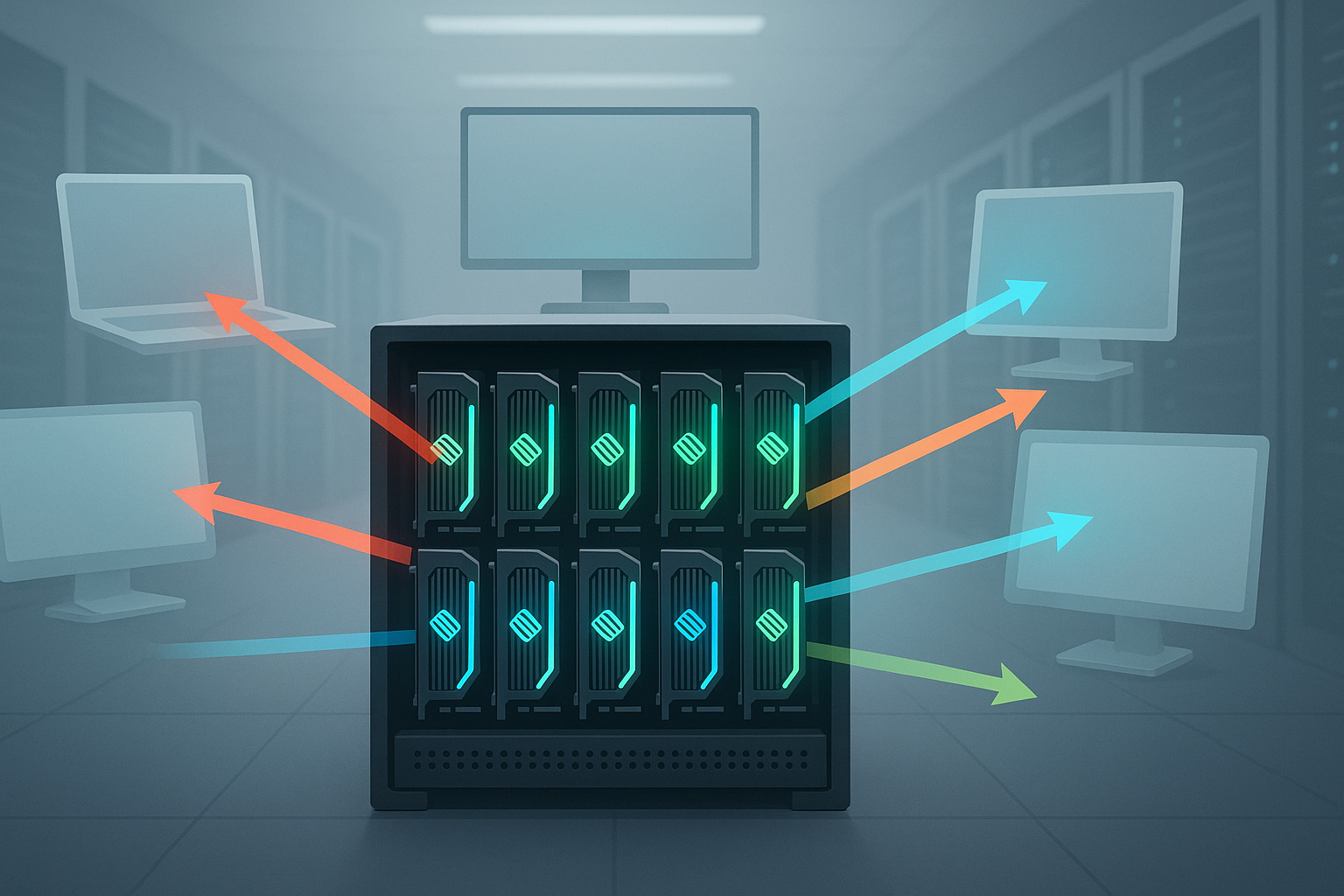

Their "GPU Pool" system is essentially bringing time-sharing back from computing's ancient history. Remember mainframes? Those room-sized computers that multiple users would access simultaneously? That concept faded when personal computers gave everyone their own dedicated machine, but AI workloads have created the perfect conditions for its resurrection.
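Classic time-sharing boils down to round-robin scheduling: every job gets a short, fixed slice of the machine, then goes to the back of the queue. The sketch below applies that idea to a single shared GPU. The job names, durations, and slice length are made up, and this illustrates the decades-old technique the mainframe analogy points to, not Alibaba's actual scheduler.

```python
from collections import deque


def round_robin(jobs: dict[str, int], quantum: int) -> list[tuple[str, int, int]]:
    """Simulate one shared device running jobs in fixed time slices.

    jobs maps a hypothetical job name to the units of work it needs.
    Returns (job, start, end) for every slice, in execution order.
    """
    queue = deque(jobs.items())
    timeline = []
    clock = 0
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)           # use at most one slice
        timeline.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            queue.append((name, remaining - run))  # unfinished: back of the line
    return timeline


if __name__ == "__main__":
    for name, start, end in round_robin({"train-a": 5, "eval-b": 2, "infer-c": 3}, quantum=2):
        print(f"{name}: t={start}..{end}")
```

Every job still finishes, just interleaved with the others, and the device's busy time (10 units here) equals the total work, so it never sits idle while anything is waiting.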

Look, the dirty secret of AI development is that it's incredibly wasteful. Most machine learning workloads are bursty as hell—they need massive computational power during training but then sit idle during data preparation or evaluation phases. It's like renting a stadium that's only filled to capacity for twenty minutes of a four-hour event.
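The arithmetic behind bursty workloads is simple. Give every job dedicated GPUs and you have to provision for the sum of each job's own peak; share one pool and you only need enough for the peak of their combined demand. Here is a minimal sketch with four hypothetical jobs whose big bursts land at different times (invented numbers, not Alibaba figures):

```python
# Hypothetical GPUs each job keeps busy in each of four hours.
demands = {
    "job-a": [8, 1, 0, 1],
    "job-b": [0, 8, 1, 0],
    "job-c": [1, 0, 8, 1],
    "job-d": [0, 1, 1, 8],
}


def dedicated_gpus(demands: dict[str, list[int]]) -> int:
    # Each job reserves enough GPUs for its own worst hour.
    return sum(max(series) for series in demands.values())


def pooled_gpus(demands: dict[str, list[int]]) -> int:
    # A shared pool only has to cover the busiest hour across all jobs.
    combined = [sum(hour) for hour in zip(*demands.values())]
    return max(combined)


def utilization(demands: dict[str, list[int]], gpus: int) -> float:
    # Fraction of provisioned GPU-hours that actually do work.
    hours = len(next(iter(demands.values())))
    busy = sum(sum(series) for series in demands.values())
    return busy / (gpus * hours)


if __name__ == "__main__":
    d, p = dedicated_gpus(demands), pooled_gpus(demands)
    print(f"dedicated: {d} GPUs, pooled: {p} GPUs, saving: {1 - p / d:.0%}")
    # dedicated: 32 GPUs, pooled: 10 GPUs, saving: 69%
    print(f"utilization: {utilization(demands, d):.0%} -> {utilization(demands, p):.0%}")
```

The gap between those two numbers is the waste that pooling recovers. It shrinks when bursts line up, so real-world savings depend heavily on how correlated the workloads are.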

Having covered cloud infrastructure since the AWS dominance era began, I've seen this pattern before. Cloud providers are obsessed with maximizing utilization—it's literally their business model. What's new here is applying this efficiency mindset to the most constrained, expensive computing resource we've seen in decades.

The timing couldn't be more perfect (or desperate, depending on your perspective).

When Sam Altman is personally jetting around the globe trying to secure GPU allocations, and is reportedly considering raising trillions of dollars for chip manufacturing, you know we're not in normal territory anymore. This is tech's version of the 1970s oil crisis, complete with rationing and wild price spikes.

Alibaba's system works by essentially hot-desking these precious GPUs. When one AI workload isn't maxing out its allocated chips, another job can swoop in and use the spare capacity. It's a bit like how airlines overbook flights knowing that some percentage of passengers won't show up... except in this case, nobody gets bumped to the next available H100.
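One way to picture hot-desking is as a packing problem: each job keeps only part of a GPU busy, so jobs get squeezed onto shared GPUs until each one is full. The sketch uses first-fit decreasing, a textbook bin-packing heuristic chosen here purely for illustration. It is not a description of Alibaba's system, and the job names and utilization percentages are hypothetical.

```python
def first_fit_decreasing(usage: dict[str, int], capacity: int = 100) -> list[dict[str, int]]:
    """Pack jobs onto as few GPUs as possible.

    usage maps a hypothetical job name to the share of one GPU it actually
    keeps busy, in percent. Returns one dict per GPU: job -> share.
    """
    gpus: list[dict[str, int]] = []
    # Place the biggest jobs first; small ones fill the leftover gaps later.
    for name, share in sorted(usage.items(), key=lambda kv: kv[1], reverse=True):
        if share > capacity:
            raise ValueError(f"{name} needs more than one GPU")
        for gpu in gpus:
            if sum(gpu.values()) + share <= capacity:
                gpu[name] = share  # borrow this GPU's idle headroom
                break
        else:
            gpus.append({name: share})  # nothing fits: bring another GPU online
    return gpus


# Hypothetical jobs and the percentage of one GPU each really uses.
jobs = {"chatbot": 70, "search": 60, "summarize": 50, "translate": 40,
        "code": 30, "vision": 20, "speech": 20, "tagging": 10}

if __name__ == "__main__":
    packed = first_fit_decreasing(jobs)
    print(f"{len(jobs)} dedicated GPUs -> {len(packed)} pooled")  # 8 dedicated GPUs -> 3 pooled
    for i, gpu in enumerate(packed):
        print(f"GPU {i}: {gpu} ({sum(gpu.values())}% busy)")
```

A production scheduler also has to juggle GPU memory, demand that shifts minute to minute, and jobs slowing each other down, which is where the hard engineering lives.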

The financial implications here are enormous—and complicated.

For cloud providers like Alibaba, this technology could dramatically improve margins while allowing them to serve more customers with existing hardware inventory. It's the computational equivalent of an all-you-can-eat buffet discovering most people only eat half a plate.
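Rough arithmetic shows why the margin story is so compelling. Suppose a set of workloads previously needed $N$ GPUs and the 82% reduction carries over unchanged (a big assumption, since real savings depend on the workload mix):

$$
\text{GPUs after pooling} = (1 - 0.82)\,N = 0.18\,N
\qquad\Longrightarrow\qquad
\frac{N}{0.18\,N} = \frac{1}{0.18} \approx 5.6
$$

On paper, then, hardware that used to serve one such set of workloads could serve about five and a half.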

For Nvidia? Well... that's where things get interesting.

On one hand, anything that reduces chip demand should, in theory, hurt Nvidia's bottom line. But Jensen Huang (he of the iconic leather jacket and rockstar CEO status) has consistently favored market expansion over artificial scarcity. If this approach makes AI affordable for a wider range of companies, total chip sales could actually rise, a dynamic economists call the Jevons paradox.

I spent some time thinking about historical parallels, and the closest one might be virtualization in the early 2000s. When VMware made it possible to run multiple operating systems on a single server, hardware manufacturers initially panicked—until they realized it actually accelerated overall server adoption by making computing more flexible and cost-effective.

There's something almost poetic about finding efficiency in scarcity. While everyone else is frantically trying to secure more chips, Alibaba's approach suggests maybe we just need to use them better.

The geopolitical angle shouldn't be overlooked either. With U.S. export controls limiting China's access to Nvidia's most advanced AI accelerators, technologies that maximize the efficiency of existing or domestically produced chips take on strategic importance. This isn't just about cost savings—it's about technological sovereignty.

(Worth noting: I've tried to get specific technical details on exactly how Alibaba's system works, but the company's materials are frustratingly light on specifics. Classic enterprise tech marketing—big claims, vague implementations.)

The broader question this raises is whether the AI industry's current approach (throwing ever-larger mountains of compute at ever-larger models) is sustainable or even sensible. It feels a bit like trying to build faster cars by simply bolting on more engines rather than improving aerodynamics or fuel efficiency.

Will Alibaba's innovation change the trajectory of AI development? Probably not on its own. But it points to a path that's increasingly attractive as the chip shortage continues—working smarter rather than just throwing more silicon at the problem.

In a world obsessed with securing more GPUs, there's something refreshingly practical about simply using the ones we have more intelligently. It's the computational equivalent of learning to pack for a vacation in a carry-on instead of checking three bags.

Now we just need to see if the rest of the industry follows suit... or if they're too busy writing purchase orders for chips they won't receive until 2025.